Yves here. This work is interesting. Using Twitter as a basis for research, the study found that “conservatives” kept sharing news more persistently in the face of a platform change designed to curb its spread. If you read about the methods Twitter put in place to prevent the spread of “misinformation,” you’ll see that they rely on nudge theory. Definition from Wikipedia:

Nudge theory is a concept in behavioral economics, decision making, behavioral policy, social psychology, consumer behavior, and related behavioral sciences that proposes adaptive designs of the decision environment (choice architecture) as ways to influence the behavior and decision-making of groups or individuals. Nudging contrasts with other ways to achieve compliance, such as education, legislation, or enforcement.

Nowhere in the article is it considered that these measures amount to soft censorship. Evidently all is fair in attempts to counter “misinformation.”

Written by Daniel Ershov, Assistant Professor, UCL School of Management, University College London, and Juan S. Morales, Associate Researcher, University of Toulouse, and Associate Professor, Department of Economics, Wilfrid Laurier University. Originally published at VoxEU.

Ahead of the 2020 US presidential election, Twitter changed its user interface for sharing posts in an effort to slow the spread of misinformation. This column uses extensive data on tweets by US news outlets to examine how the platform change affected the spread of news on Twitter. Overall, the policy significantly reduced news sharing, but the size of the reduction varied by ideology. Because conservative users responded less to the intervention, sharing of content from left-wing outlets fell significantly more than sharing of content from right-wing outlets.

Social media provides important access points to information on a variety of topics, including politics and health (Aridor et al. 2024). While social media reduces the cost of consumer information search, its potential for amplification and dissemination can also contribute to the spread of misinformation, disinformation, hate speech, and out-group hostility (Giaccherini et al. 2024, Vosoughi et al. 2018, Müller and Schwarz 2023, Allcott and Gentzkow 2017). It can also increase political polarization (Levy 2021) and foster the rise of extreme politics (Zhuravskaya et al. 2020). Mitigating the spread and impact of harmful content is a key policy concern for governments around the world and an important aspect of platform governance. Since at least the 2016 presidential election, the US government has charged platforms with reducing the spread of false or misleading information ahead of elections (Ortutay and Klepper 2020).

Top-down and bottom-up regulation

Important questions about how to achieve these goals remain unanswered. Broadly speaking, platforms can take one of two approaches to the problem: (1) pursue “top-down” regulation by manipulating users’ access to, and the visibility of, different types of information; or (2) pursue “bottom-up,” user-centred regulation by changing user interface features to nudge users to stop sharing harmful content.

The advantage of a top-down approach is that the platform has more control. Ahead of the 2020 election, Meta began modifying user feeds so that users saw less of certain types of extreme political content (Bell 2020). Ahead of the 2022 US midterm elections, Meta fully implemented new default settings for user newsfeeds that included less political content (Stepanov 2021). While these policy approaches can be effective, they raise concerns about the extent to which platforms can directly manipulate information flows and bias users toward or against particular political views. Moreover, top-down interventions that lack transparency risk user backlash and a loss of trust in the platform.

Alternatively, a bottom-up approach to reducing the spread of misinformation involves relinquishing some control and encouraging users to change their own behavior (Guriev et al. 2023). For example, platforms can provide fact-checking services for political posts or attach warning labels to sensitive or controversial content (Ortutay 2021). In a series of online experiments, Guriev et al. (2023) show that warning labels and fact checks reduce users’ sharing of misinformation. However, the effectiveness of this approach can be limited, and it requires significant platform investment in fact-checking capabilities.

Twitter User Interface Changes in 2020

Another frequently suggested bottom-up approach is for platforms to slow the flow of information, especially misinformation, by encouraging users to think carefully about the content they share. In October 2020, a few weeks before the US presidential election, Twitter changed the functionality of its “retweet” button (Hatmaker 2020). The modified button prompted users to use a “Quote Tweet” instead when sharing a post. The hope was that this change would make users think twice about the content they were sharing and thus slow the spread of misinformation.

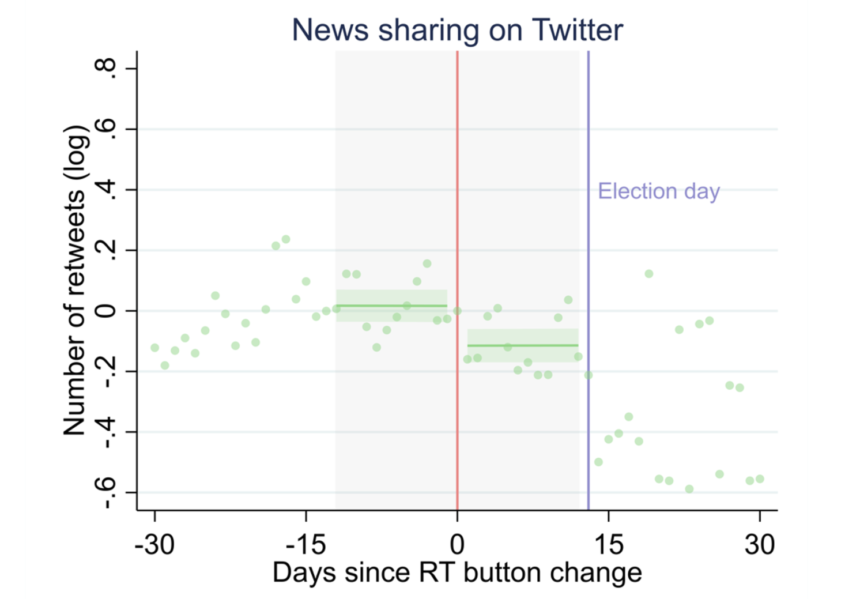

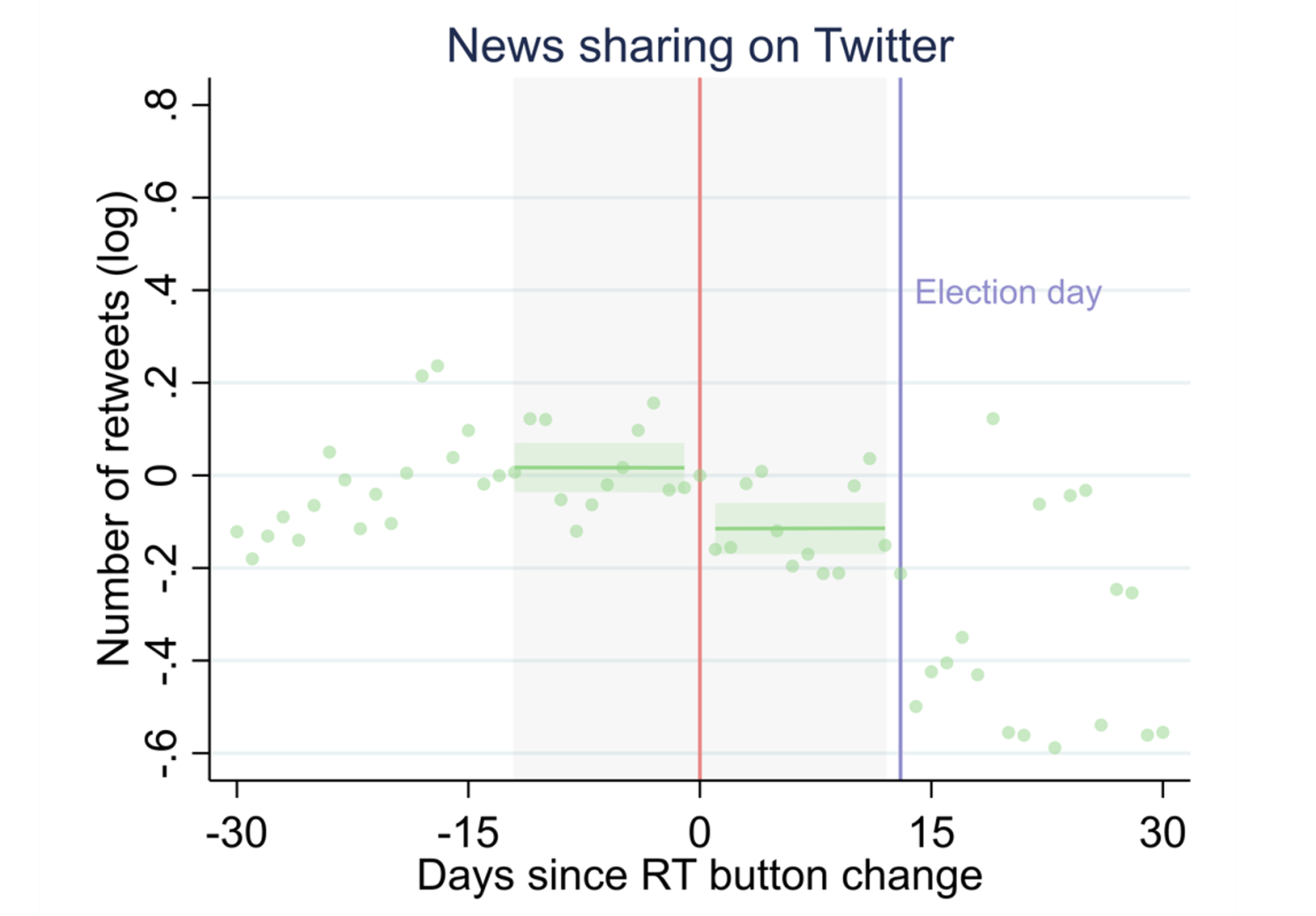

A recent paper (Ershov and Morales 2024) investigates how this change to Twitter’s user interface affected the spread of news on the platform. Many news outlets and political organisations use Twitter to promote their content, so the change was particularly notable because it could reduce consumers’ exposure to misinformation. We collected Twitter data for popular US news outlets and examined what happened to their retweets immediately after the change was implemented. Our research shows that this simple adjustment to the retweet button had a large effect on news sharing: on average, retweets of news outlets’ posts fell by more than 15% (see Figure 1).

Figure 1 News sharing and Twitter user interface changes

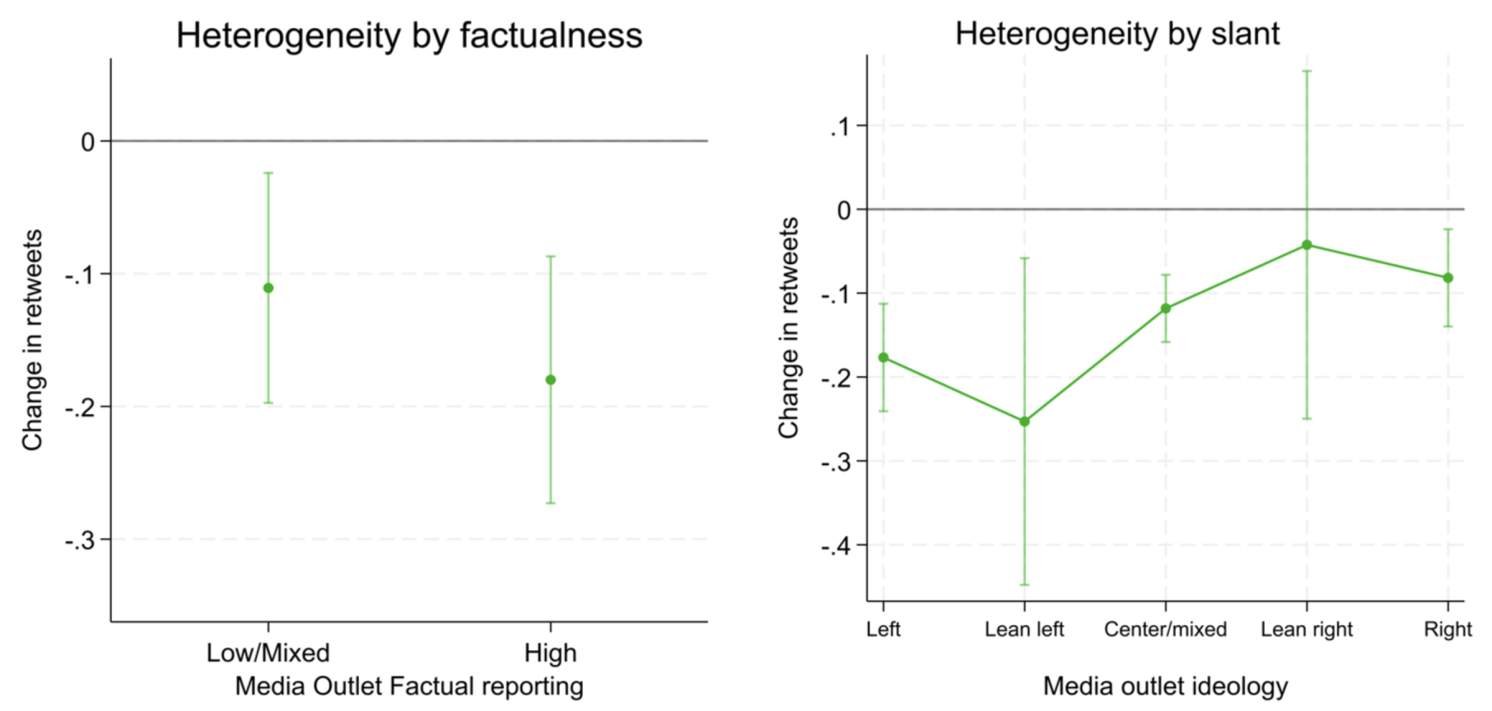

Perhaps more interesting is whether the change affected all news outlets to the same extent. In particular, we first examine whether “low-factual” outlets (as classified by third-party organisations), where misinformation is more common, were more affected by Twitter’s change, as intended. Our analysis reveals that this was not the case. The effect on these outlets was not larger than on outlets with higher journalistic quality; if anything, it was smaller. Furthermore, similar comparisons revealed that left-wing outlets (also classified by third parties) were significantly more affected than right-wing outlets. The average decline in retweets for liberal outlets was about 20%, while the decline for conservative outlets was only 5% (Figure 2). These results suggest that Twitter’s policy failed, not only because it did not reduce the spread of misinformation relative to factual news, but also because it slowed the spread of political news from one ideology relative to the other, which could exacerbate political divisions.

Figure 2 Heterogeneity by outlet factualness and political bias

We investigate the mechanisms behind these effects and rule out a range of potential alternative explanations, including differences in media outlet characteristics, outlets’ criticism of “Big Tech,” the heterogeneous presence of bots, and variation in tweet content such as sentiment and predicted virality. We conclude that the policy likely had a disproportionate effect simply because conservative news-sharing users were less responsive to Twitter’s nudge. Using an additional dataset of individual users who share news on Twitter, we observe that conservative users changed their behavior significantly less than liberal users after the change. In other words, conservatives appear to have been more likely to ignore Twitter’s prompt and keep sharing content as before. As additional evidence for this mechanism, we show similar results in a nonpolitical setting: tweets by NCAA football teams from colleges in predominantly Republican counties were less affected by the user interface change than tweets by teams in Democratic counties.

Finally, using web traffic data, we find that Twitter’s policy also affected visits to these news outlets’ websites. After the retweet button change, traffic from Twitter to media outlets’ websites decreased, and the decline was disproportionately large for liberal outlets. These off-platform spillovers confirm the importance of social media platforms for overall information dissemination and highlight the potential risks that platform policies pose to news consumption and public opinion.

Conclusion

Bottom-up policy changes on social media platforms need to account for the fact that the effects of new platform designs can vary widely across types of users, which can lead to unintended consequences. Social scientists, social media platforms, and policymakers should work together to analyse and understand these nuances, with the goal of improving platform design to foster informed and balanced conversations that support healthy democracies.
