Yves here. Educated readers have been trained to treat peer reviewed papers with far more respect than presumed-to-be-not-verified research. Below, KLG explains why the peer review standard was never quite what it aspired to be and has deteriorated under money pressures at publishers and financial conflicts of interest of investigators.

KLG’s overview:

Peer review is the “gold standard” (I really dislike that locution, almost as much as “deliverable”) that proves the worth of scientific publication. It has never been perfect. Nor has it ever been without controversy. The original contributors to the first scientific journal, Philosophical Transactions of the Royal Society, took a while to get it right. But overall peer review has served science well.

However, it has become strained over the past 25+ years as the business of scientific publishing has hypertrophied beyond all reason (except for making money). This post describes a few recent failures of peer review. My sense, from reading the literature and the CVs of scientists applying for jobs and promotion, is that these examples are quite common.

While there can be no single cure for the problem, bringing peer review out of the shadows of anonymity, where poor work and worse can hide, is the best solution currently imaginable. In the meantime, read the “peer reviewed” scientific and other scholarly literature with care.

One of my most difficult tasks in my day job is convincing medical students that just because something is published in a peer reviewed journal does not mean it presents the truth so far as we can know it. At times published papers approach Harry Frankfurt’s conception of bullshit: The authors don’t care if the article is true or not, only that it gets published. A few guidelines are included for the general reader.

By KLG, who has held research and academic positions in three US medical schools since 1995 and is currently Professor of Biochemistry and Associate Dean. He has performed and directed research on protein structure, function, and evolution; cell adhesion and motility; the mechanism of viral fusion proteins; and assembly of the vertebrate heart. He has served on national review panels of both public and private funding agencies, and his research and that of his students has been funded by the American Heart Association, American Cancer Society, and National Institutes of Health.

The first question asked when discussing a scientific publication is, “Has this paper been peer reviewed?” If the answer is “no,” then true or not, right or wrong, the paper has no standing. During the first years of the HIV/AIDS epidemic this was never a question. Those of us in the lab awaited every weekly issue of Nature and Science and biweekly issue of Cell to learn the latest. There were a few false starts and some backbiting and competition and the clash of titans about who discovered HIV, but the authority of these publications was seldom in doubt. It was a different era.

Forty years later biomedical publishing has outgrown itself (e.g., 436,201 Covid publications in PubMed as of 27 August 2024 and no real signs the pandemic is “resolved”), especially as publishers new and old have taken advantage of the internet to “publish” journals online across the scientific spectrum. On the one hand, online open access has been a boon to scientists and their readers with more outlets available. On the other, it has often become impossible to distinguish the wheat from the chaff, as all indications are that peer review has suffered as a concomitant of this growth. The general outlines of this change in the scientific literature were covered earlier this year. Here I want to illustrate how this manifestation of Gresham’s Law has influenced peer review, which can be defined as the anonymous, reasoned criticism of a scientific manuscript or grant application by expert peers who are equipped to do so. I have been the reviewer and the reviewed since the mid-1980s, mostly with fair results in both directions (but there is one grant reviewer I still would like to talk to if given the chance). Now that my focus has changed, I do not miss it too much.

One of the larger “new” publishers with more than 400 titles is MDPI, which has had two names beneath the one abbreviation: First Molecular Diversity Preservation International that began as a chemical sample archive and now Multidisciplinary Digital Publishing Institute. MDPI journals cover all fields of science. Papers are reviewed rapidly and made available online quickly. The final “product” is indistinguishable in pdf from those of legacy journals that go back to the Philosophical Transactions of the Royal Society (1665). What follows is a summary of one scientist’s experience as an MDPI reviewer. As we have discussed here in another context, sometimes n = 1 is enough.

Rene Aquarius, PhD, is a postdoctoral scientist in the Department of Neurosurgery at Radboud University Medical Center in Nijmegen, The Netherlands. He recently described his experience as a reviewer for a special edition of the MDPI Journal of Clinical Medicine. One should note here this use of “special edition.” These expand the market, so to speak, for the publisher and its contributors, so they are common among many open access digital publishers. Titles used by these publishers also mimic those of legacy journals. In this case that would be the Journal of Clinical Investigation (1924), which has been the leading journal in clinical medicine for 100 years. It is published by the American Society for Clinical Investigation (ASCI). Arguments from authority are not necessarily valid as COVID-19 revealed early and often, but the ASCI has earned its authority in clinical research.

In November 2023, upon reading the manuscript (a single-center retrospective analysis of clinical cases) Dr. Aquarius immediately noticed several problems, including discrepancies between the protocol and final study, a target sample size larger than what was used, and a difference in minimum age for patients between the protocol and the manuscript. The statistical analysis was faulty in that it created a high probability of Type I errors (false positives) and the study lacked a control group, “which made it impossible to establish whether changes in a physiological parameter could really predict intolerance for a certain drug in a small subset of patients.” Dr. Aquarius could not recommend publication. Reviewer 2 thought the paper should be accepted after minor revision.
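For readers unfamiliar with Type I errors, a brief illustration may help. The review as quoted does not say how the analysis inflated the false-positive rate. One common route, assumed here purely for illustration, is running several significance tests without correcting for multiple comparisons. The function names, the 0.05 threshold, and the assumption of independent tests below are all hypothetical and are not taken from the manuscript or from Dr. Aquarius's review.

```python
# Illustrative sketch only: shows how the chance of at least one false
# positive grows when several independent tests are each run at the same
# significance level. Nothing here is taken from the manuscript in question.

def familywise_error_rate(alpha: float, n_tests: int) -> float:
    """Probability of at least one Type I error across n independent tests."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if n_tests < 0:
        raise ValueError("n_tests must be non-negative")
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test threshold that keeps the familywise rate at or below alpha."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


if __name__ == "__main__":
    for m in (1, 5, 10, 20):
        rate = familywise_error_rate(0.05, m)
        print(f"{m:2d} uncorrected tests at 0.05: "
              f"chance of a false positive = {rate:.3f}")
```

Under these assumptions, ten uncorrected tests carry roughly a 40 percent chance of at least one spurious "significant" result, which is the kind of problem a reviewer is supposed to catch.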

The editorial decision was “reject, with a possibility of resubmission after extensive revisions.” These extensive revisions were returned only two days after the immediate rejection; my revisions, extensive or not, have usually required several weeks at a minimum. Before he could begin his review of the revised manuscript, Dr. Aquarius was notified that his review was no longer needed because the editorial office already had enough peer reviewers for this manuscript.

But Dr. Aquarius reviewed the revision anyway and found it had indeed undergone extensive revisions in those two days. As he put it, the “biggest change…was also the biggest red flag. Without any explanation the study had lost almost 20% of its participants.” And none of the issues raised in his original review had been addressed. It turned out that another peer reviewer had rejected the manuscript with similar concerns. Two other reviewers accepted the manuscript with minor revisions. Still, the editor rejected the manuscript after fifteen days, from start to finish.

But the story did not end there. A month later Dr. Aquarius received an invitation to review a manuscript for the MDPI journal Geriatrics. Someone in the editorial office apparently goofed by including him as a reviewer. It was the same manuscript that must have been shifted internally through the transfer service of MDPI. The manuscript had also reverted to its original form, although without the registered protocol and with an additional author. Analysis of patient data without formal approval by an Institutional Review Board or equivalent is never acceptable. Dr. Aquarius rejected the paper yet again, and shortly thereafter the editor decided to withdraw the manuscript. So far, so good. Peer review worked. And then in late January 2024, according to Dr. Aquarius, the manuscript was published in the MDPI journal Medicina.

How and why? Well, the article processing charge (APC) for Journal of Clinical Medicine was 2600 Swiss Francs in 2023 (~$2600 in August 2024). The charges were CHF 1600 and CHF 2200 for Geriatrics and Medicina, respectively. Nice work if you can get it, for the authors who got a paper published and the publisher who collected several thousand dollars for their trouble. “Pixels” are not free but they are a lot cheaper than paper and ink and postage. But what does this example, which as a general proposition is quite believable, say about MDPI as a scientific publisher?

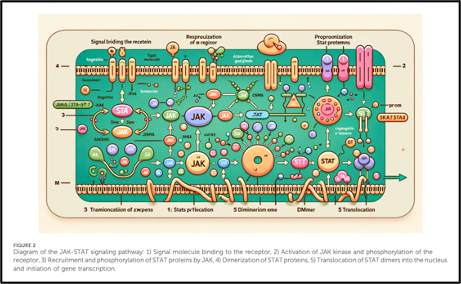

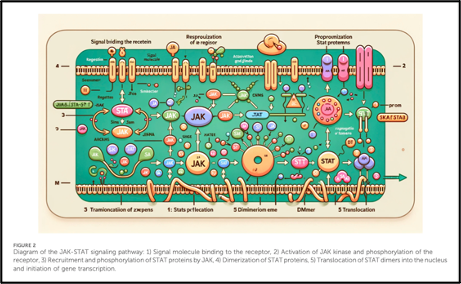

Another recent case of suspect peer review was made public immediately after publication earlier this year in the journal Frontiers in Cell and Developmental Biology. Frontiers is “Where Scientists Empower Society, Creating Solutions for Healthy Lives on a Healthy Planet.” Frontiers currently publishes 232 journals, from Frontiers in Acoustics to Frontiers in Water. The paper in question was entitled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway,” a recondite title for a general audience but a topic of interest to any cell biologist working on stem cells or signal transduction.

As with the previous example, the time from submission to acceptance was relatively short, from 17 November to 28 December 2023. No more than two days after publication a firestorm erupted and the publication was withdrawn by the publisher soon after. It turned out the paper was very likely written using ChatGPT or equivalent Algorithmic Intelligence (AI). It was nothing but twelve pages of nonsense, with reasonable-sounding text at first glance but figures undoubtedly drawn by AI that were nothing but pure gibberish. The paper itself has vanished into the ether, apparently deleted without a trace by the publisher. In anticipation of this I saved a pdf and would share if there were an easy mechanism to do so. This link gives a general sense of the entire ridiculous event, with illustrations. The AI drawing of the rodent is nonsensical and NPSFW, not particularly safe for work. The thing is, this manuscript passed peer review, and the editor and peer reviewers are listed on the front page. They all agreed that this Figure 2 is legitimate science. The other figures are just as ridiculous.

How could this have gotten through anything resembling good editorial practice and functional peer review? The only answer is that it was passed through the process without a second look by the editor or either of the two reviewers. So, is n = 1 enough here, too, when it comes to Frontiers journals? No one will get any credit for “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway” because it has been scrubbed. But the paper reviewed and rejected for apparent good reason by Dr. Aquarius has been published. More importantly it will be counted. It will also still be faulty.

Is there an answer to this crisis in peer review? (1) Yes, for peer review to function properly, it must be out in the open. Peer reviewers should not be anonymous. Members of the old guard will respond that younger (i.e., early career) peer reviewers will be reluctant to criticize their elders, who will undoubtedly have power by virtue of their positions. This is not untrue, but well-reasoned critiques that address strengths and weaknesses of a manuscript or a grant application will be appreciated by all, after what would be a short period of adaptation. This would also level the “playing field.”

Of course, this requires that success rates for grant applications rise above the 10-20 percent that is the current range for unsolicited investigator-initiated applications to the National Institutes of Health (NIH). In a game of musical chairs with career implications, good will and disinterestedness cannot be assumed. In my long experience it has become clear that the top third of a pool of applications should get funded because there are no objective distinctions among them, while the middle third should get funded upon revision. The bottom third will remain hopeless for the duration and are not reviewed by the full panel. In any case, the data are clear that NIH grants in the “top 20%” are indistinguishable in impact measured by citation of the work produced and papers published. I anticipate that this would extend to 30% if the authors of this paper repeated their analysis with a more current dataset. For those interested in a comprehensive treatment of modern science, this book by the authors of this paper is quite good, but expensive. Ask your local library to get it for you!

A recent article on non-anonymous peer review was written by Randy Robertson, an English Professor at Susquehanna University: Peer review will only do its job if referees are named and rated (registration required). Of all things, the paper that led him down this path was one that stated “erect penile length increased 24 percent over the past 29 years.” I think Professor Robertson is correct on peer review, but he also inadvertently emphasized several deficiencies in the current scientific literature.

What did Professor Robertson find when he read this “systematic review and meta-analysis”? The authors claim they included studies in which the investigators did the measurements, but three of the largest studies included papers in which the measurements were “self-reported.” Yes, I laughed at “self-reported,” too. Nor were the method(s) of measurement described. I don’t even want to think about that. Robertson wrote to the editors and corresponding authors, who acknowledged the problems and stated they would revise the article. After months the correction has not been published. The World Journal of Men’s Health, new to me, is published by the Korean Society for Sexual Medicine and Andrology, which may be organized only for the publication of this journal. The authors, however, are from universities in Milan and Rome (Sapienza) in Italy, and from Stanford University and Emory University in the United States. Heady company.

This paper seems frivolous, but perhaps it is not. However, it does not support what it purports. Professor Robertson also misses the mark when he states that a meta-analysis is the “gold standard” in science. It may be the standard in Evidence-Based Medicine, but reviews and meta-analyses are by definition secondary, at least once removed from primary results. The question raised here is whether caveat lector must be our guide for reading the scientific literature, or any scholarly literature, when publish-or-perish, along with publish-and-still-perish, rules. This is not tenable. If each reader must also be a peer reviewer, then peer review has no meaning.

Robertson is correct when he states that effective review before publication is superior to post-publication “curation” online, which will leave us “awash in AI-generated pseudo-scholarship.” See above for an egregious example. Good refereeing is not skimming so you can get back to your own work or rejecting a submission because you do not like the result, or that it encroaches on your territory. Good refereeing means “embracing the role of mentor” and “being generous and critical…it is a form of teaching.” This is certainly an academic and scholarly ideal but also worth remembering as the minimal requirement for legitimate publication of scientific research.

The biggest problem with peer review, aside from the fact that it is unpaid labor without which scholarly publishing could not exist, is that reviewing is unrewarded professionally and will remain so as long as it is anonymous. The stakes must be raised for reviewers. Frontiers journals do identify reviewers and editors, but it didn’t matter in the paper discussed above. When reviewers are known they will get credit, if not payment for services, and the entire process will become transparent and productive. It would also weed out the lazy, ineffective, and malicious. This would be a good thing. When high-quality reviews are recognized as the scholarship they are instead of mere “service” to the profession they become “an integral part of scholarly production, if book reviews merit a distinct CV section, so do peer reviews.” Would we be better off with a slightly slower science? The question answers itself. It is better to be right than first.

Finally, what is a layperson to do when reading the peer-reviewed scientific and other scholarly literature? Several rules of thumb come to mind that can improve our “spidey sense” about published science:

- Read the acknowledgements. If the paper is biomedical or energy science, then how it was funded is critical.

- Note the time from submission to publication. If this is less than 6-8 weeks, caveat lector, indeed. Good editing and good reviewing take time, as does the analysis of images for evidence of manipulation (See, for example, Lesné, Sylvain).

- Identify the publisher. We do this all the time in our daily life. In These Times and the Wall Street Journal are predictable and useful. It is just as important in reading the scientific literature to know the underlying business model of the publication. Established legacy scientific publishers are not perfect, but they have survived for a reason. Journals published by widely recognized professional organizations are generally reliable.

- Do not automatically reject recent open access publishers, but remember the business model, again. Many of them exist primarily to collect article processing fees from scientists whose promotion and tenure committees can do nothing but count. This matters.
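The timing rule of thumb in the list above is easy to check by hand, but a minimal sketch may help anyone who wants to apply it to many papers. The function names are hypothetical, and the 42-day cutoff is simply the lower end of the 6-8 week guideline. The example dates are the submission and acceptance dates reported above for the Frontiers paper, so they understate the submission-to-publication interval the rule actually refers to.

```python
from datetime import date

# Lower bound of the 6-8 week rule of thumb, expressed in days.
MIN_REVIEW_DAYS = 6 * 7


def review_days(submitted: date, published: date) -> int:
    """Number of days between submission and publication (or acceptance)."""
    if published < submitted:
        raise ValueError("published date precedes submitted date")
    return (published - submitted).days


def is_suspiciously_fast(submitted: date, published: date,
                         minimum_days: int = MIN_REVIEW_DAYS) -> bool:
    """True when the turnaround is shorter than the chosen minimum."""
    return review_days(submitted, published) < minimum_days


if __name__ == "__main__":
    # Dates reported in the text for the Frontiers paper
    # (submission to acceptance, not publication).
    submitted = date(2023, 11, 17)
    accepted = date(2023, 12, 28)
    print(review_days(submitted, accepted), "days;",
          "caveat lector" if is_suspiciously_fast(submitted, accepted)
          else "within the rule of thumb")
```

A short turnaround is only a prompt for closer reading, not proof of poor review; the cutoff is a judgment call and can be passed in as `minimum_days`.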

In the long run, either science for science’s sake will return or we will continue wandering in this wilderness. We would make the most progress by de-Neoliberalizing science and its publication along with everything else that has Undone the Demos. Suggestions are welcome.

Notes

(1) I am acutely aware that peer review has not always been fair. I have seen too much. But the breaches have been exceptions that prove the rule. Those who are found out are eventually ignored. I have reviewed for a dozen legacy journals (full disclosure: I have reviewed one paper for a Frontiers journal, which will be my last) and served on and chaired a review panel for a well-known non-governmental funding agency for more than ten years. I know that group cared deeply about being fair and constructive. Despite my frequent failures, I believe that most panels are fair. The problem is that careers sometimes perish before things even out.